I’ve spent an embarrassing amount of my life waiting for tsc to finish. You know the drill. You change one interface in a shared library, hit save, and then stare at your terminal while the TypeScript compiler thinks about the consequences of your actions for forty-five seconds. It’s enough time to question your career choices, but not quite enough time to go get a coffee.

That changed this week.

With the native port of TypeScript (the shiny new 7.0 release everyone is screaming about) finally hitting our machines, the bottleneck has shifted. It’s no longer the compiler holding things up—it’s me. And honestly? It feels weird. Good, but weird.

For years, we accepted that static analysis in JavaScript was just going to be slow. We told ourselves that Node.js was doing its best. But moving the compiler and language service to Go has stripped away that excuse. I ran a full build on our monolith this morning and it finished before I could even Alt-Tab to Slack.

It’s Not Just About Speed, It’s About Sanity

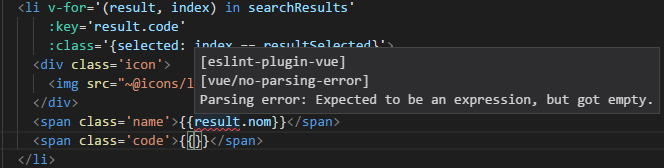

The speed boost is obvious, but the real win here is the Language Service. That’s the thing that powers your VS Code IntelliSense. In the old JS-based implementation, on a large project, you’d type a dot and wait. Maybe a second. Maybe two. It broke your flow.

Now, because the new architecture leverages actual parallelism (something Node always struggled with for CPU-bound tasks), the feedback loop is instant. The compiler isn’t just checking your code linearly anymore; it’s eating up every core your CPU has to offer.

Let’s look at what it’s actually doing. When you write something standard, like an async data fetcher, the compiler is doing a massive amount of inference work behind the scenes.

```typescript
// The compiler has to infer types, check null safety,
// and validate the DOM interaction all at once.
async function fetchUserData(userId) {
  try {
    // API call
    const response = await fetch(`/api/users/${userId}`);
    if (!response.ok) {
      throw new Error(`HTTP error! status: ${response.status}`);
    }
    const data = await response.json();
    updateUserProfile(data);
  } catch (error) {
    console.error("Failed to fetch user:", error);
    showErrorState();
  }
}

function updateUserProfile(user) {
  // Direct DOM manipulation
  const nameEl = document.getElementById('user-name');
  const bioEl = document.getElementById('user-bio');

  // The compiler checks if 'nameEl' can be null here
  if (nameEl) {
    nameEl.textContent = user.fullName;
  }
  if (bioEl) {
    bioEl.textContent = user.bio || "No bio available";
  }
}
```

In previous versions, checking a file like this in a project with 5,000 other files involved a lot of memory overhead. The JS garbage collector would work overtime. The Go implementation just manages memory better. Go is still garbage-collected, but structs can be laid out inline as plain values, so there is less to allocate in the first place, and its collector runs mostly concurrently with the program instead of stopping the world for long pauses.
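Going back to the fetcher for a second: the null check the compiler does on `nameEl` is easier to see once the types are spelled out. Here is a minimal sketch of that, not the code from our project. The `User` interface, the `FakeElement` type, and the `getElement` helper are all made up for illustration; `getElement` stands in for `document.getElementById` so the snippet runs outside a browser.

```typescript
// Hypothetical response shape; the original snippet never declares one.
interface User {
  fullName: string;
  bio?: string;
}

// Hypothetical stand-in for a DOM element, so this runs without a browser.
interface FakeElement {
  textContent: string | null;
}

// Only "user-name" is registered; "user-bio" is deliberately missing.
const elements = new Map<string, FakeElement>([
  ["user-name", { textContent: null }],
]);

// Same contract as document.getElementById: an element, or null if absent.
function getElement(id: string): FakeElement | null {
  return elements.get(id) ?? null;
}

// Returns the ids it actually wrote to, which makes the narrowing observable.
function renderUser(user: User): string[] {
  const written: string[] = [];
  const nameEl = getElement("user-name"); // FakeElement | null
  const bioEl = getElement("user-bio"); // FakeElement | null

  // Under strictNullChecks, writing to nameEl without this guard is a compile error.
  if (nameEl) {
    nameEl.textContent = user.fullName;
    written.push("user-name");
  }
  if (bioEl) {
    bioEl.textContent = user.bio || "No bio available";
    written.push("user-bio");
  }
  return written;
}

const writtenIds = renderUser({ fullName: "Ada Lovelace" });
console.log(writtenIds);
```

Every `if` here is a place where the checker has to narrow a union type, and it does that for every nullable value in every file. That is the work that used to pile up on a large project.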

The Transpilation Trap

A lot of people forget that tsc does two things: it checks types, and it emits JavaScript. For a long time, we used Babel or SWC for the emitting part because tsc was too slow. We effectively split our build pipelines—one tool to build (fast), one tool to type-check (slow).
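That split is easy to demonstrate with TypeScript’s own `transpileModule` API, which emits JavaScript for a single file without type-checking it. This is roughly the job Babel and SWC take over in a split pipeline. The snippet below is a small sketch that assumes the `typescript` package is installed; the `source` string is made up for the example.

```typescript
import * as ts from "typescript";

// Deliberately wrong on purpose: a string assigned to a variable typed as number.
const source = `const retries: number = "three";`;

// transpileModule parses and emits one file in isolation.
// It performs no type checking, so the mismatch above goes unnoticed.
const result = ts.transpileModule(source, {
  compilerOptions: { target: ts.ScriptTarget.ES2020 },
});

// The emitted JavaScript has the annotation stripped and the bug intact.
console.log(result.outputText);
```

The emit step happily produces JavaScript for code the checker would reject, which is exactly why the slow type-check pass had to stay in the pipeline as a separate step.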

With this native port, I’m actually considering dropping the extra build tools. If the official compiler is fast enough to emit code in milliseconds, why complicate the stack? Simplicity is the one thing we’re all desperate for right now.

Here is what the compiler actually outputs from that async function above (targeting ES2020, with comments removed). It’s clean, but notice that nothing TypeScript-specific survives; all the type information the compiler worked out exists only during development:

```javascript
// The output JavaScript (ES2020 target)
async function fetchUserData(userId) {
    try {
        const response = await fetch(`/api/users/${userId}`);
        if (!response.ok) {
            throw new Error(`HTTP error! status: ${response.status}`);
        }
        const data = await response.json();
        updateUserProfile(data);
    }
    catch (error) {
        console.error("Failed to fetch user:", error);
        showErrorState();
    }
}
function updateUserProfile(user) {
    const nameEl = document.getElementById('user-name');
    const bioEl = document.getElementById('user-bio');
    if (nameEl) {
        nameEl.textContent = user.fullName;
    }
    if (bioEl) {
        bioEl.textContent = user.bio || "No bio available";
    }
}
```

It looks identical, right? That’s the point. But getting to that output used to cost us precious seconds. Now it’s effectively free.

Why Go? Why Now?

I was skeptical when rumors started floating around last year about the switch to Go. Rewriting a compiler that has been hardened over a decade of JavaScript edge cases? Sounds like a recipe for bugs.

But the ceiling on Node.js performance for CPU-heavy tasks is real. JavaScript is single-threaded. You can spawn worker threads, sure, but the overhead of passing data between them kills the gains for things like AST (Abstract Syntax Tree) traversal.
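To get a feel for that overhead: data sent to a Node worker with `postMessage` is copied using the structured clone algorithm rather than shared. The sketch below calls `structuredClone` directly on a toy tree to show the copy step without the ceremony of spawning a worker. The `AstNode` shape and the tree dimensions are invented for illustration, and a real compiler AST is far heavier per node.

```typescript
// Hypothetical, heavily simplified AST node.
interface AstNode {
  kind: string;
  children: AstNode[];
}

// Build a uniform tree: every non-leaf node gets `fanout` children.
function buildTree(depth: number, fanout: number): AstNode {
  const children: AstNode[] = [];
  if (depth > 0) {
    for (let i = 0; i < fanout; i++) {
      children.push(buildTree(depth - 1, fanout));
    }
  }
  return { kind: depth === 0 ? "Identifier" : "Block", children };
}

// Walk the whole tree and count its nodes.
function countNodes(node: AstNode): number {
  let total = 1;
  for (const child of node.children) {
    total += countNodes(child);
  }
  return total;
}

const tree = buildTree(6, 4);

// Same copy a worker boundary would force: every node is duplicated.
const started = Date.now();
const copy = structuredClone(tree);
const elapsedMs = Date.now() - started;

console.log(`copied ${countNodes(copy)} nodes in about ${elapsedMs} ms`);
console.log(`same object after copy? ${copy === tree}`);
```

The copy is a completely separate object graph, so the memory is paid twice and the time to clone grows with the size of the tree. Do that for every file handed to every worker and the parallelism stops paying for itself.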

Go uses goroutines, and it’s designed for this. Goroutines share one address space, so the compiler can parallelize the type-checking of independent files without copying data between workers or paying for a separate JavaScript heap per Node worker.

I ran a test on a project with complex mapped types—the kind that usually makes the fan on my laptop sound like a jet engine.

- Old Compiler (v6.x): 42 seconds cold build.

- New Native Compiler (v7.0): 4.5 seconds.

I stared at the number for a while. I thought it cached something. I ran it again. 4.3 seconds. That’s roughly nine times faster than the old build. That’s not an “optimization.” That’s a completely different tool.

Is It Perfect?

No. Nothing is.

There are still some edge cases with custom transformers that rely on the old TypeScript Compiler API in JavaScript. If you wrote a bunch of hacky build scripts that hook into internal compiler methods, you’re going to have a bad weekend fixing them. The API surface is largely compatible, but the underlying mechanics have shifted.

Also, debugging the compiler itself is harder now. Before, if tsc was acting weird, I could dig into node_modules and throw a console.log in the JS. Now it’s a compiled binary. Good luck patching that on the fly.

But honestly? I don’t care. I’d trade “hackability” for “actually finishing a build” any day of the week. The ecosystem needed this. We were drowning in complexity and slow tools, and this feels like coming up for air.

If you haven’t updated your CI pipelines yet, do it. Your AWS bill (and your sanity) will thank you.