I killed my server on purpose yesterday. Just yanked the power cord (metaphorically, I clicked “Terminate” in the console) right in the middle of a payment processing script.

Normally, this is where I’d panic. I’d be scrambling to check database logs, trying to figure out if the user got charged, if the confirmation email went out, or if the whole thing just vanished into the ether leaving a corrupted state behind. You know the drill. We’ve all built those fragile retry loops that pray the server stays up for another three seconds.

But this time? Nothing broke. When the server came back up, the script just… continued. Right from the line where it stopped. It remembered the variables. It remembered the state. It didn’t re-charge the credit card.

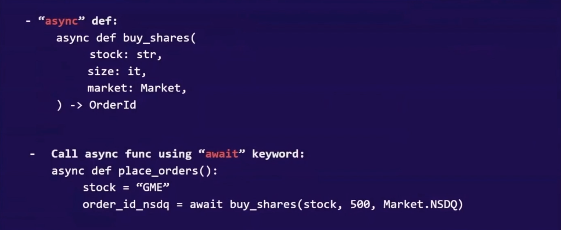

This isn’t magic, though it feels like it. It’s durable execution, and with the recent shift in the TypeScript ecosystem towards tools like Vercel’s new Workflow kit, we’re finally moving past the “fingers crossed” era of async programming.

The Lie We Tell Ourselves About Async/Await

Here is the fundamental problem with standard TypeScript async code: it assumes immortality.

When you write await processPayment(), you are making a massive bet that your Node.js process, container, or serverless function will not crash, restart, or time out during that operation. In a serverless world—especially with Vercel or AWS Lambda—that is a terrible bet.

Look at this standard code. It looks innocent, but it’s a ticking time bomb:

```typescript
// Assumes `stripe`, `db`, and `emailClient` are clients initialized elsewhere in the app.
async function onboardUser(userId: string) {
  // 1. Create Stripe customer
  const customer = await stripe.customers.create({ metadata: { userId } });

  // DANGER ZONE: If the server dies here, we have a customer but no DB record.
  // If we retry the whole function, we might create a DUPLICATE customer.

  // 2. Update database
  await db.users.update(userId, { stripeId: customer.id });

  // 3. Send welcome email
  await emailClient.send(userId, 'Welcome!');
}
```

If your function times out after step 1, your state is corrupted. You have an orphaned Stripe customer. If you put this in a retry queue, you execute step 1 again. Now you have two Stripe customers.
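To make that failure mode concrete, here is a small simulation of a crash followed by a retry. Everything in it is a hypothetical stand-in: `FakeStripe`, `FakeDb`, and the `crashAfterStep1` flag are invented for illustration and are not real Stripe or database APIs. The only point is to show what a naive "run it again from the top" retry does to your state.

```typescript
// Hypothetical in-memory stand-ins for Stripe and a database (not real APIs).
class FakeStripe {
  customers: { id: string; userId: string }[] = [];

  async createCustomer(userId: string): Promise<{ id: string; userId: string }> {
    const customer = { id: `cus_${this.customers.length + 1}`, userId };
    this.customers.push(customer);
    return customer;
  }
}

class FakeDb {
  stripeIds = new Map<string, string>();

  async update(userId: string, stripeId: string): Promise<void> {
    this.stripeIds.set(userId, stripeId);
  }
}

// Same shape as onboardUser above; `crashAfterStep1` simulates the process dying.
async function naiveOnboard(
  stripe: FakeStripe,
  db: FakeDb,
  userId: string,
  crashAfterStep1: boolean,
): Promise<void> {
  const customer = await stripe.createCustomer(userId);
  if (crashAfterStep1) throw new Error("simulated crash");
  await db.update(userId, customer.id);
}

// Roughly what a retry queue does: run, fail, run again from the top.
async function runWithOneRetry(): Promise<{ customers: number; stripeId: string | undefined }> {
  const stripe = new FakeStripe();
  const db = new FakeDb();
  try {
    await naiveOnboard(stripe, db, "user_1", true);
  } catch {
    await naiveOnboard(stripe, db, "user_1", false);
  }
  return { customers: stripe.customers.length, stripeId: db.stripeIds.get("user_1") };
}
```

Calling `runWithOneRetry()` ends with two customers in `FakeStripe` and the database pointing at the second one. The first customer is orphaned, which is exactly the corrupted state described above.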

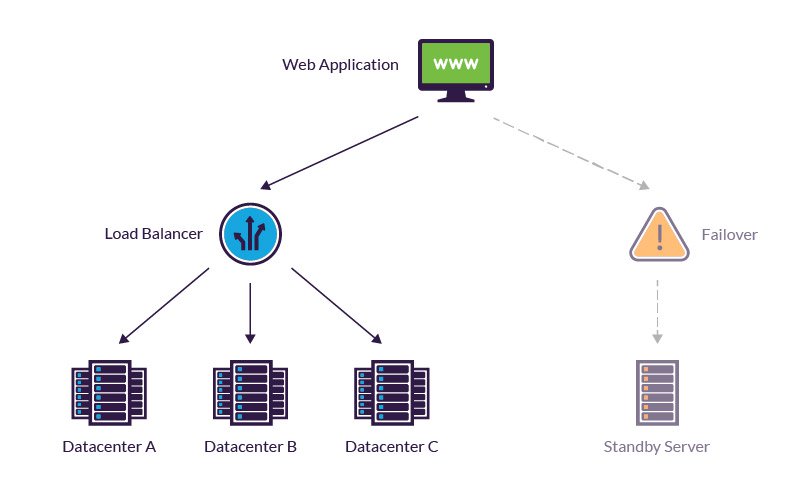

For years, the “fix” was painful. We wrote state machines. We used queues (BullMQ, SQS). We stored “status” flags in Postgres. We wrote distinct worker scripts for every tiny step. It was boilerplate hell.

Enter “Workflow as Code”

The industry has been slowly moving toward this idea that your code should define the workflow, and the infrastructure should handle the durability. We saw it with Temporal, then Inngest, and now it’s hitting the mainstream with lightweight, framework-integrated tools.

The concept is simple: you write procedural code, but you wrap the dangerous parts in “steps”. If the server dies, the system replays the code. When it hits a “step” that already finished, it skips execution and instantly returns the saved result from the last run.
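A toy model helps show what "replay and skip" means in practice. The sketch below is not how any particular engine is implemented. The `Map` stands in for a durable store, and `createRunner`, `workflow`, and `replayDemo` are hypothetical names made up for this example.

```typescript
// A toy, in-memory model of step replay. Real engines persist results in a
// durable store; the Map here is a hypothetical stand-in for that store.
type StepStore = Map<string, unknown>;

function createRunner(store: StepStore) {
  return async function run<T>(stepId: string, fn: () => Promise<T>): Promise<T> {
    if (store.has(stepId)) {
      // Step finished in an earlier attempt: return the saved result, skip fn.
      return store.get(stepId) as T;
    }
    const result = await fn();
    store.set(stepId, result); // Record the result before moving on.
    return result;
  };
}

let chargeCalls = 0; // Counts how often the "dangerous" side effect really ran.

async function workflow(store: StepStore, crashAfterCharge: boolean): Promise<string> {
  const run = createRunner(store);
  const chargeId = await run("charge-card", async () => {
    chargeCalls += 1;
    return `ch_${chargeCalls}`;
  });
  if (crashAfterCharge) throw new Error("simulated crash");
  await run("save-receipt", async () => `receipt-for-${chargeId}`);
  return chargeId;
}

async function replayDemo(): Promise<{ chargeId: string; chargeCalls: number }> {
  const store: StepStore = new Map(); // Survives the "crash" below.
  try {
    await workflow(store, true); // First attempt dies right after the charge.
  } catch {
    // A real engine would notice the failure and schedule a replay.
  }
  const chargeId = await workflow(store, false); // Replay from the top.
  return { chargeId, chargeCalls };
}
```

After `replayDemo()`, the charge callback has run exactly once even though the workflow function ran twice. One caveat the toy glosses over: a real crash can still land between the side effect and the write to the store, so a step can run more than once in the worst case, and idempotency on the external call is still worth having.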

Here is what that looks like in modern TypeScript using the new workflow directives. Treat it as a conceptual sketch based on the latest patterns, not any one SDK’s exact API:

```typescript
import { serve } from "@workflow/sdk";

export const POST = serve(async (context) => {
  const { request, run, sleep } = context;
  const body = await request.json();

  // Step 1: This runs once. If it succeeds, the result is cached forever.
  const customer = await run("create-stripe-customer", async () => {
    return await stripe.customers.create({
      email: body.email
    });
  });

  // If the server crashes HERE and restarts:
  // The code re-runs. It hits "create-stripe-customer".
  // The SDK sees it already finished. It returns 'customer' immediately without calling Stripe.
  // Magic.

  await run("update-database", async () => {
    await db.users.update(body.userId, {
      stripeId: customer.id
    });
  });

  // We can even sleep for DAYS without keeping the server running.
  // The state is persisted, the function "pauses", and wakes up 3 days later.
  await sleep("3 days");

  await run("send-followup-email", async () => {
    await emailClient.send(body.email, "Hey, did you like the product?");
  });
});
```

I cannot overstate how much code this deletes. You don’t need a separate worker file. You don’t need a cron job table in your database to check if 3 days have passed. The code is the infrastructure.
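If you are wondering how a function can "sleep" for three days with no server running, one plausible mechanism is that the engine persists a wake-up timestamp and simply ends the invocation. The sketch below models that idea with a fake clock. It is synchronous for brevity (a real SDK's sleep would be awaited), and `Suspend`, `createSleep`, and the `Map` store are all hypothetical names.

```typescript
// Toy model of a durable sleep: instead of holding a timer in memory, the
// engine persists a wake-up timestamp and ends the invocation.
class Suspend {
  readonly wakeAt: number;
  constructor(wakeAt: number) {
    this.wakeAt = wakeAt;
  }
}

const DAY_MS = 24 * 60 * 60 * 1000;

function createSleep(store: Map<string, number>, now: () => number) {
  return function sleep(sleepId: string, durationMs: number): void {
    let wakeAt = store.get(sleepId);
    if (wakeAt === undefined) {
      wakeAt = now() + durationMs; // First visit: record when to wake up.
      store.set(sleepId, wakeAt);
    }
    if (now() < wakeAt) throw new Suspend(wakeAt); // Stop; a scheduler re-invokes later.
    // Otherwise the sleep is over and the workflow continues past this line.
  };
}

function followUpWorkflow(store: Map<string, number>, now: () => number, sent: string[]): void {
  const sleep = createSleep(store, now);
  sleep("wait-before-followup", 3 * DAY_MS);
  sent.push("followup-email");
}

function sleepDemo(): { suspendedUntil: number | null; sent: string[] } {
  const store = new Map<string, number>();
  const sent: string[] = [];
  let clock = 0; // Fake clock in milliseconds so the demo runs instantly.
  let suspendedUntil: number | null = null;
  try {
    followUpWorkflow(store, () => clock, sent);
  } catch (err) {
    if (!(err instanceof Suspend)) throw err;
    suspendedUntil = err.wakeAt; // No process needs to stay alive in between.
  }
  clock = 3 * DAY_MS; // Three days later the scheduler replays the workflow.
  followUpWorkflow(store, () => clock, sent);
  return { suspendedUntil, sent };
}
```

The first invocation stops at the sleep and records a wake-up time. The second, three fake days later, walks straight past it and sends the email. The persisted timestamp plays the role of the cron table you no longer have to write yourself.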

Why This Matters for AI Agents

This is where things get interesting for 2025. We aren’t just building CRUD apps anymore; we’re building agents. And LLMs are slow.

I was building a research agent recently that scrapes a website, summarizes it, searches for related legal precedents, and then writes a report. The whole process takes about 4 minutes.

On a standard Vercel serverless function, you hit the timeout limit (often 10-60 seconds on hobby/pro plans) and the execution is killed. Poof. Work lost.

With durable workflows, you break the agent’s thought process into steps. It handles the “long pause” problem natively. If the LLM takes 60 seconds to generate a response, the workflow waits. If the webhook callback from the scraping service takes an hour? The workflow sleeps and wakes up when the event arrives.
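Here is a rough simulation of why checkpointed steps get a four-minute agent past a short timeout. It is a simplification under made-up numbers: each step "costs" 60 fake seconds, the platform kills any invocation after 100 fake seconds, and `invokeAgent`, `runUntilDone`, and `TimedOut` are hypothetical names. Real engines typically schedule steps more cleverly than this.

```typescript
// Toy model: a 4-step agent on a platform with a hard per-invocation limit.
const INVOCATION_LIMIT_S = 100;
const STEP_COST_S = 60;

class TimedOut {}

function invokeAgent(store: Map<string, string>): string {
  let elapsed = 0; // Resets on every invocation, like a fresh serverless function.
  const step = (id: string, work: () => string): string => {
    const saved = store.get(id);
    if (saved !== undefined) return saved; // Finished earlier: replay is free.
    elapsed += STEP_COST_S;
    if (elapsed > INVOCATION_LIMIT_S) throw new TimedOut(); // Platform kills us.
    const result = work();
    store.set(id, result); // Checkpoint before moving on.
    return result;
  };
  const page = step("scrape", () => "page-text");
  const summary = step("summarize", () => `summary(${page})`);
  const cases = step("search-precedents", () => `cases(${summary})`);
  return step("write-report", () => `report(${cases})`);
}

function runUntilDone(): { report: string; invocations: number } {
  const store = new Map<string, string>();
  let invocations = 0;
  for (;;) {
    invocations += 1;
    try {
      return { report: invokeAgent(store), invocations };
    } catch (err) {
      if (!(err instanceof TimedOut)) throw err;
      // The engine re-invokes; completed steps are served from the store.
    }
  }
}
```

In this model the agent needs four invocations, each of which stays under the limit, and the final report is built from results saved along the way. Without the store, every invocation would die at the second step and never make progress.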

The “Use Workflow” Paradigm

The syntax we’re seeing now—specifically with the new kits dropping—makes this feel native to Next.js. We’re seeing directives like "use workflow" or explicit step wrappers that make the mental model identical to writing a synchronous script.

Check out how we can handle a flaky API—something I deal with constantly when integrating with third-party legacy banking systems:

```typescript
// A resilient workflow that handles flaky external APIs
export const workflow = async ({ step }) => {
  // Step 1: Try to fetch data with automatic retries
  const bankData = await step.run(
    "fetch-bank-data",
    async () => {
      const response = await fetch("https://api.flaky-bank.com/transactions");
      if (!response.ok) throw new Error("Bank API is down");
      return response.json();
    },
    {
      // The SDK handles the exponential backoff for us
      retries: {
        limit: 5,
        initialInterval: "1s",
        backoffFactor: 2,
      }
    }
  );

  // Step 2: Process the data
  // This only runs after Step 1 has succeeded
  const fraudAlerts = await step.run("analyze-fraud", async () => {
    return analyzeTransactions(bankData);
  });

  return { processed: true, alerts: fraudAlerts };
};
```

If that bank API fails three times and succeeds on the fourth, my code doesn’t care. I don’t write a try/catch loop with a setTimeout inside (which keeps the function running, and billed, while it does nothing but wait). The workflow engine handles the error, suspends execution, and retries later.
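For a sense of what those retry settings imply, here is the arithmetic written out. This assumes plain exponential backoff with no jitter and no maximum delay. A real SDK may add either, and `RetryOptions` and `backoffSchedule` are hypothetical names that mirror the option shape used above, with the interval in milliseconds.

```typescript
// Hypothetical retry options mirroring the shape used in the workflow above.
interface RetryOptions {
  limit: number;             // Maximum number of retries.
  initialIntervalMs: number; // Delay before the first retry.
  backoffFactor: number;     // Multiplier applied after each retry.
}

// Delay before retry number n (1-based): initial * factor^(n - 1).
function backoffSchedule(opts: RetryOptions): number[] {
  const delays: number[] = [];
  for (let attempt = 0; attempt < opts.limit; attempt++) {
    delays.push(opts.initialIntervalMs * Math.pow(opts.backoffFactor, attempt));
  }
  return delays;
}

const retryDelays = backoffSchedule({ limit: 5, initialIntervalMs: 1000, backoffFactor: 2 });
// retryDelays is [1000, 2000, 4000, 8000, 16000]
```

Under this model, a call that fails three times and succeeds on the fourth attempt has waited 1 s + 2 s + 4 s, so about 7 seconds in total, none of which needs a running function.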

It’s Not All Sunshine

I’ll be honest: there is a learning curve. You have to understand that the workflow code around your steps must be deterministic, because it re-runs from the top on every replay. You can’t just call Math.random() or Date.now() between steps and expect the same value during a replay. Capture it inside a step, so the result is saved and handed back unchanged.
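A toy replay model makes the determinism rule easier to see: a value computed in the workflow body is recomputed on every replay, while a value returned from a step is saved and reused. The sketch is synchronous for brevity, the `Map` is a stand-in for a durable store, and `runStep`, `nonDeterministic`, and `workflowBody` are hypothetical names.

```typescript
// Toy replay model showing which values drift across replays.
type ReplayStore = Map<string, unknown>;

function runStep<T>(store: ReplayStore, stepId: string, fn: () => T): T {
  if (store.has(stepId)) return store.get(stepId) as T; // Replay: reuse saved result.
  const result = fn();
  store.set(stepId, result);
  return result;
}

let callCounter = 0;
// Stand-in for Math.random() or Date.now(): a different value on every call.
function nonDeterministic(): number {
  callCounter += 1;
  return callCounter;
}

function workflowBody(store: ReplayStore): { outside: number; inside: number } {
  const outside = nonDeterministic(); // Re-evaluated on every replay.
  const inside = runStep(store, "pick-value", () => nonDeterministic()); // Captured once.
  return { outside, inside };
}

function determinismDemo() {
  const store: ReplayStore = new Map();
  const firstRun = workflowBody(store);
  const replayed = workflowBody(store); // Same store, as after a restart.
  return { firstRun, replayed };
}
```

In `determinismDemo()`, `inside` is the same in both runs because it came out of the store, while `outside` changes. If later steps branch on a value like `outside`, the replay can take a different path than the original run, which is where the weird bugs come from.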

Also, debugging can be weird. When you’re testing locally, you sometimes have to clear your local state store to “reset” the workflow. Otherwise you change your code, but the engine thinks, “Oh, I already ran this step,” and serves you the old cached data. That bit me twice yesterday.

But compared to managing a fleet of worker dynos and a Redis queue just to send a “3 days later” email? I’ll take the durable workflow any day.

We are finally getting to a point where the code we write matches the business logic in our heads: “Do X, then wait, then do Y.” No more translating that simple logic into a distributed system architecture diagram just to make it reliable.